AI Writing Tools vs Human Writing: Real Results

AI writing tools vs human writing is no longer an abstract debate.

In 2026, it’s an operational decision that affects output speed, consistency, accuracy, and long-term reliability.

Most bloggers asking this question are asking it the wrong way.

They ask:

“Which one ranks better?”

That’s not the real comparison.

The real comparison is:

Which approach produces reliable, repeatable publishing results at scale—without breaking quality?

This article does one job only:

It compares real performance outcomes between:

- AI-written content

- Human-written content

- Hybrid content (AI + human)

Not SEO rules.

Not penalties.

Not tools.

Just performance under real blogging conditions.

If you’re still deciding which platforms actually fit different blogging workflows, start with my full breakdown of the best AI writing tools for bloggers.

What “Real Results” Actually Means (Define the Playing Field)

Before comparing AI writing tools vs human writing, you need to define what results actually mean.

This comparison measures performance across six practical dimensions:

- Output quality

- Speed to publish

- Editing effort required

- Accuracy and error rate

- Consistency across multiple posts

- Reliability at scale

If a method fails in any of these, it doesn’t work long-term.

The Three Content Production Models (Clear Separation)

Almost every blog fits into one of these three production models.

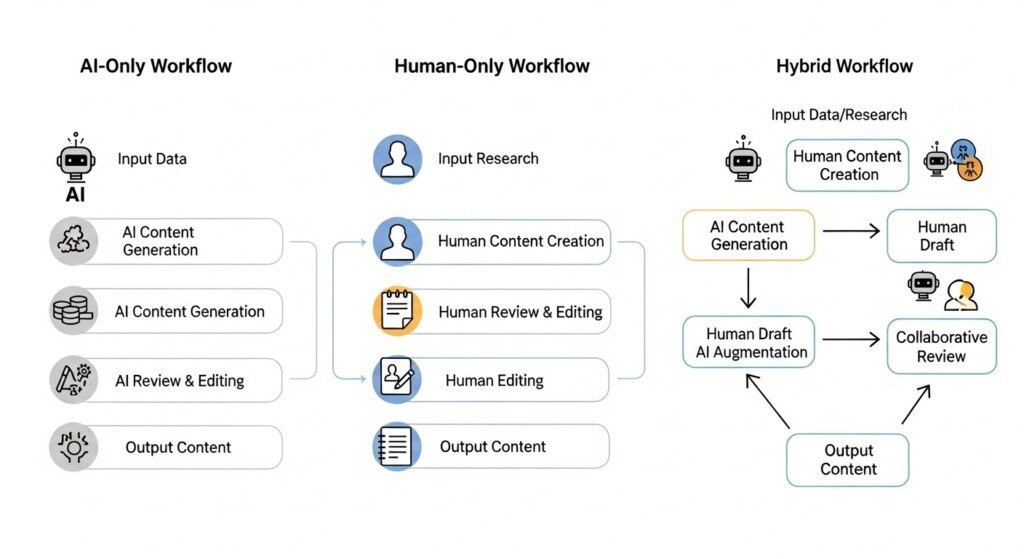

Model 1: AI-Only Content Production

This is the most common—and the most misunderstood.

AI-only content means:

- Drafting with AI

- Minimal or no human editing

- Fast publishing

- High volume

This model is chosen for speed, not quality.

Model 2: Human-Only Content Production

This is traditional blogging.

Human-only content means:

- Manual research

- Manual outlining

- Manual writing

- Manual editing

This model is chosen for control, not scale.

Model 3: Hybrid Content Production (AI + Human)

This is what serious publishers actually use.

Hybrid content means:

- AI handles drafting and expansion

- Humans handle structure, judgment, accuracy, and refinement

This model is chosen for performance balance.

Everything below compares these three models—nothing else.

Hybrid workflows work best when paired with platforms designed for rankings, not just text generation, which is why choosing the right AI writing tools for SEO directly impacts results.

Output Quality Comparison (What Comes Out the Other End)

AI-Only Output Quality

AI writing tools are excellent at surface-level completeness.

Strengths:

- Clean grammar

- Logical paragraph structure

- Consistent formatting

- Predictable tone

Weaknesses:

- Generic explanations

- Repetitive phrasing

- Weak conclusions

- Limited nuance

- Shallow insight

AI output often looks finished but feels empty when read carefully.

Human-Only Output Quality

Human writing excels at depth and originality.

Strengths:

- Natural flow

- Strong opinion

- Real examples

- Contextual nuance

- Authority tone

Weaknesses:

- Inconsistent quality

- Structural mistakes

- Variable clarity

- Fatigue over long projects

Humans write better individual articles—but struggle to maintain that quality repeatedly.

Hybrid Output Quality

Hybrid content removes the weaknesses of both.

Strengths:

- Structured drafts from AI

- Original insights from humans

- Cleaner conclusions

- Better explanations

- Consistent quality across posts

This is the only model that maintains quality past 20–30 posts.

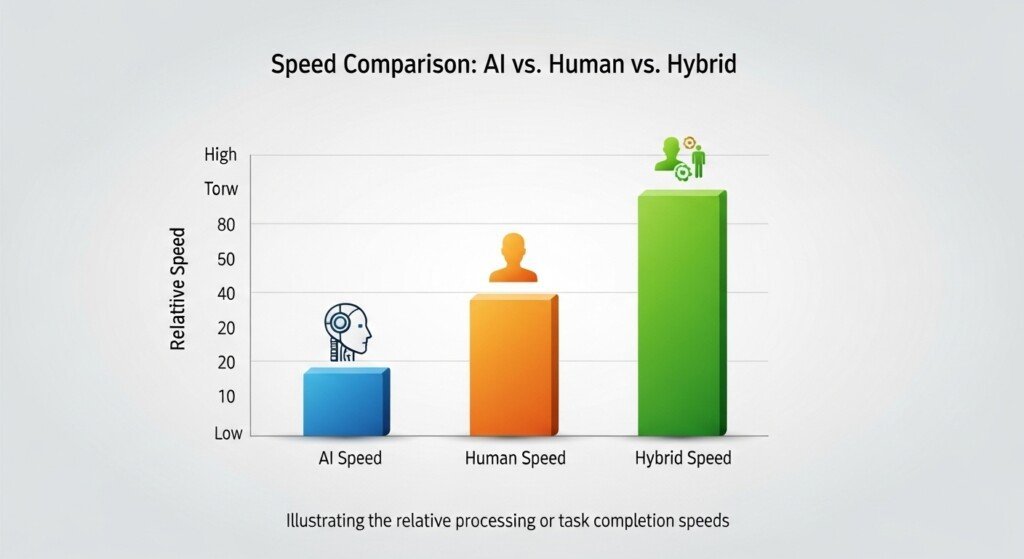

Speed Comparison (Time-to-Publish Reality)

Speed matters more than talent in modern blogging.

AI-Only Speed

Fastest by far.

Typical workflow:

- Prompt → draft → publish

Time per post:

- 10–30 minutes

Problem:

Speed comes at the cost of editorial judgment.

Human-Only Speed

Slowest by far.

Typical workflow:

- Research → outline → write → edit → publish

Time per post:

- 4–8 hours (sometimes more)

Problem:

Speed collapses under volume.

Hybrid Speed

Balanced and sustainable.

Typical workflow:

- AI draft → human refine → publish

Time per post:

- 60–120 minutes

This is the only speed profile that scales without quality collapse.
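
To make the time ranges above concrete, here is a rough back-of-the-envelope sketch in Python. The per-post ranges are the ones quoted in this section. The 10-hour weekly budget and the `posts_per_week` helper are assumptions for illustration only. The sketch counts time, not quality.

```python
# Minutes per post (fastest, slowest), taken from the ranges quoted above.
MINUTES_PER_POST = {
    "ai_only": (10, 30),
    "human_only": (240, 480),  # 4-8 hours
    "hybrid": (60, 120),
}


def posts_per_week(model: str, weekly_hours: float) -> tuple[int, int]:
    """Return (worst_case, best_case) whole posts that fit in the weekly budget."""
    fastest, slowest = MINUTES_PER_POST[model]
    budget_minutes = weekly_hours * 60
    return int(budget_minutes // slowest), int(budget_minutes // fastest)


if __name__ == "__main__":
    # Hypothetical budget: 10 hours of writing time per week.
    for model in MINUTES_PER_POST:
        low, high = posts_per_week(model, 10)
        print(f"{model}: {low}-{high} posts per week")
```

With that assumed 10-hour budget, the arithmetic gives 20-60 AI-only posts, 1-2 human-only posts, and 5-10 hybrid posts per week.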

Editing Effort Required (Hidden Cost Most Ignore)

AI-Only Editing Load

Ironically, AI-only content often needs more editing, not less.

Why?

- Repetition

- Over-explanation

- Weak transitions

- Artificial tone

Most bloggers skip editing to save time—this is where performance collapses.

Human-Only Editing Load

Lower per sentence, higher per article.

Humans edit as they write, but:

- Miss structure flaws

- Miss redundancy

- Miss optimization opportunities

Editing quality depends entirely on the writer’s discipline.

Hybrid Editing Load

Lowest overall effort.

Why?

- AI removes blank-page friction

- Humans focus only on refinement

- Editing becomes targeted, not exhaustive

This is editorial efficiency, not laziness.

Accuracy and Error Rate (Quiet Performance Killer)

AI-Only Accuracy

AI writing tools:

- Hallucinate facts

- Confidently state wrong information

- Miss edge cases

- Oversimplify complex topics

Unchecked AI content accumulates small errors that destroy credibility.

Human-Only Accuracy

Humans are better at:

- Contextual accuracy

- Domain judgment

- Spotting nonsense

But humans also:

- Assume things

- Rely on memory

- Skip verification when tired

Hybrid Accuracy

Hybrid workflows catch errors fastest.

AI drafts → humans verify.

Accuracy improves without the slowdown of writing every post by hand.

Consistency Across Multiple Posts (Where Most Blogs Die)

One good article doesn’t matter.

Consistency does.

AI-Only Consistency

High structural consistency.

Low qualitative consistency.

Posts start to:

- Sound the same

- Reuse phrases

- Lose personality

Readers notice.
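
One rough way to check whether posts are starting to reuse phrases is to count word sequences that show up in more than one post. The sketch below is only an illustration under stated assumptions: the three-word window, the function names, and the idea of comparing raw text are all hypothetical choices, and it is no substitute for actually rereading the posts.

```python
import re
from collections import Counter


def trigrams(text: str) -> set[tuple[str, ...]]:
    """Return the set of three-word sequences in a post (lowercased)."""
    words = re.findall(r"[a-z']+", text.lower())
    return {tuple(words[i:i + 3]) for i in range(len(words) - 2)}


def shared_phrases(posts: list[str], min_posts: int = 2) -> list[str]:
    """List three-word phrases that appear in at least `min_posts` posts."""
    counts = Counter()
    for post in posts:
        # Using a set means each post counts a phrase at most once.
        counts.update(trigrams(post))
    return sorted(" ".join(p) for p, n in counts.items() if n >= min_posts)
```

A long list of shared phrases across recent posts is a hint, not proof, that the drafts are converging on the same stock wording.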

Human-Only Consistency

Low consistency over time.

Quality fluctuates based on:

- Mood

- Energy

- Available time

This kills publishing schedules.

Hybrid Consistency

Best of both worlds.

- AI ensures structure

- Humans ensure voice

This is how publishers maintain brand tone across 50+ posts.

Reliability at Scale (The Final Test)

Scale exposes everything.

AI-Only at Scale

Fails fastest.

Symptoms:

- Content fatigue

- Similarity issues

- Reader disengagement

- Declining trust

Human-Only at Scale

Burns out.

Symptoms:

- Missed deadlines

- Reduced output

- Inconsistent quality

- Abandoned blogs

Hybrid at Scale

Survives.

Signs:

- Stable publishing

- Predictable quality

- Sustainable workload

- Editorial control

This is why hybrid workflows dominate real publishing environments.

Interim Conclusion (Lock This In)

AI writing tools vs human writing is not a winner-takes-all contest.

Pure AI fails on trust.

Pure human fails on scale.

Hybrid wins on performance stability.

Trustworthiness Under Real Readers (Not Theory)

Trust is where most AI-vs-human debates collapse into nonsense.

Trust is not:

- “Does Google trust this?”

- “Does it look human?”

Trust is:

- Does a real reader believe this content after reading 3–5 posts from the same site?

AI-Only Trust Performance

AI-only content fails trust quietly, not instantly.

Early-stage:

- Readers don’t notice

- Content seems fine

- Bounce rates look normal

Mid-stage (after multiple posts):

- Tone feels repetitive

- Explanations feel safe and generic

- No strong positions

- No lived experience

Result:

- Readers stop returning

- Brand loyalty never forms

- Authority plateaus

AI-only content is acceptable, not believable.

Human-Only Trust Performance

Human-written content builds trust faster.

Why:

- Opinionated language

- Clear confidence

- Unique phrasing

- Real judgment

But there’s a catch:

Trust is tied to output consistency.

When publishing slows or quality drops:

- Trust erodes

- Momentum dies

- Readers forget the site exists

Trust without consistency doesn’t compound.

Hybrid Trust Performance

Hybrid content produces repeat trust.

Why:

- AI maintains publishing rhythm

- Humans maintain authority tone

- Readers see consistency and credibility

This is the only model that builds:

- Return readers

- Perceived expertise

- Brand reliability

Trust is not about who writes.

It’s about who controls the final output.

Reliability After 50–100 Posts (The Breaking Point)

Most blogs never reach this stage.

Those that do expose their production model immediately.

AI-Only After 50+ Posts

Common failure signals:

- Posts blur together

- Topics feel recycled

- Language patterns repeat

- Reader engagement drops

This is not a penalty problem.

It’s a content fatigue problem.

AI-only systems are not designed to self-correct.

Human-Only After 50+ Posts

Human-only blogs fail differently.

Failure signals:

- Publishing gaps

- Inconsistent depth

- Writer burnout

- Abandoned series

Quality may still be high — but output collapses.

Hybrid After 50+ Posts

Hybrid workflows stabilize.

Why:

- AI absorbs drafting load

- Humans conserve decision-making energy

- Editing becomes surgical, not exhausting

This is where hybrid workflows separate professionals from hobbyists.

When AI Clearly Outperforms Humans

This matters. Be honest.

AI wins when the task is:

- Repetitive informational writing

- Structural consistency

- Content updates and rewrites

- Expanding existing sections

- Maintaining formatting across many posts

AI is superior at:

- Speed

- Volume

- Pattern execution

- Structural discipline

A human who insists on doing these tasks manually is working inefficiently.

When Humans Clearly Outperform AI

Equally important.

Humans win when the task requires:

- Judgment calls

- Strong opinions

- Risk-taking language

- Experience-based explanation

- Authority positioning

- Sensitive or nuanced topics

AI avoids:

- Responsibility

- Strong claims

- Admitting uncertainty

Humans do not.

This is why editorial control must remain human.

The Real Performance Difference (This Is the Core Insight)

The real difference in AI writing tools vs human writing is not quality.

It is failure mode.

- AI fails by sounding empty

- Humans fail by stopping

Hybrid workflows fail slowest.

That is the only metric that matters long-term.

Performance Comparison Table (Operational View)

| Factor | AI-Only | Human-Only | Hybrid |
|---|---|---|---|
| Draft speed | Excellent | Poor | Good |
| Consistency | High (structure) | Low | High |
| Authority tone | Weak | Strong | Strong |
| Scalability | High | Low | High |
| Burnout risk | None | High | Low |
| Reader trust | Medium | High | High |
| Long-term reliability | Low | Medium | High |

Hybrid is not “best in theory”.

It is best under pressure.

Why Most Bloggers Still Get This Wrong

Because they think the decision is philosophical.

It isn’t.

It’s operational.

They ask:

“Should I use AI or write myself?”

The correct question is:

“Which parts should never be automated, and which should never be manual?”

Most bloggers automate judgment and manually do grunt work.

That’s backward.

The Correct Role Assignment (Lock This In)

AI Should Handle:

- Drafting

- Expansion

- Reformatting

- Updating

- Rewriting

- Speed-sensitive work

Humans Should Handle:

- Structure decisions

- Topic prioritization

- Opinion

- Accuracy

- Tone

- Final approval
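
The role split above can be written down as a simple lookup, so that nothing gets automated by accident. This is a minimal sketch, not a prescribed tool: the task labels and the `owner_for` helper are hypothetical names, and sending unlisted tasks to a human is a conservative assumption rather than something the lists themselves state.

```python
# Task labels mirror the two lists above; the names themselves are illustrative.
AI_TASKS = {
    "drafting", "expansion", "reformatting",
    "updating", "rewriting", "speed_sensitive_work",
}
HUMAN_TASKS = {
    "structure_decisions", "topic_prioritization", "opinion",
    "accuracy", "tone", "final_approval",
}

# One lookup table built from both lists.
OWNERS = {**dict.fromkeys(AI_TASKS, "ai"), **dict.fromkeys(HUMAN_TASKS, "human")}


def owner_for(task: str) -> str:
    """Return "ai" or "human" for a task label."""
    key = task.strip().lower().replace(" ", "_").replace("-", "_")
    # Assumption: anything not explicitly listed stays under human control.
    return OWNERS.get(key, "human")


if __name__ == "__main__":
    for task in ("Drafting", "Final approval", "Fact checking"):
        print(f"{task}: {owner_for(task)}")
```

Defaulting unknown tasks to a human keeps the final say where the article argues it belongs.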

Once you understand this, the debate ends.

Final Verdict: AI Writing Tools vs Human Writing (Real Results)

There is no winner.

There is only correct role separation.

- AI-only content fails quietly

- Human-only content fails from exhaustion

- Hybrid content survives, compounds, and scales

The blogs that grow in 2026 are not choosing sides.

They are designing systems.

If your workflow:

- Relies entirely on AI → it will hollow out

- Relies entirely on humans → it will stall

If your workflow:

- Uses AI for execution

- Uses humans for judgment

You win.

That’s the real result.

Once performance and scalability are understood, the real decision becomes economic. I break that down in detail when answering whether AI writing is worth it for bloggers.

Bottom Line

AI didn’t replace writers.

It exposed inefficient ones.

Human writing didn’t lose value.

It became too expensive to waste.

The future is not AI content.

The future is human-controlled AI production.

FAQ

1. Do AI writing tools outperform human writers in SEO rankings?

No. AI writing tools do not automatically outperform human writers in SEO. Rankings depend on content structure, search intent alignment, originality, and editorial quality. AI improves speed and consistency, but human judgment is still required to produce content that ranks reliably.

2. Can AI-written content rank without human editing?

In most cases, no. Raw AI-generated content lacks depth, originality, and strong trust signals. Without human editing, content often becomes repetitive, thin, or misaligned with search intent, which limits ranking potential.

3. Is hybrid content (AI + human) better than human-only writing?

Yes, in most real-world blogging scenarios. Hybrid workflows combine AI’s drafting speed with human oversight, resulting in consistent output, better structure, higher originality, and stronger long-term performance compared to human-only writing.

4. Does Google treat AI content differently from human-written content?

No. According to Google’s public guidance, content is evaluated on its quality rather than on whether AI or a human wrote it. What counts is usefulness, originality, clarity, depth, and relevance. Low-quality content underperforms regardless of how it was created.

5. What is the biggest mistake bloggers make when using AI for SEO content?

The biggest mistake is publishing AI content without editorial control. Using AI for drafting is effective, but skipping structure decisions, rewriting, internal linking, and intent alignment leads to poor rankings and unstable performance.

Disclaimer

Some links on this page may be affiliate links.

If you click and purchase, I may earn a small commission at no extra cost to you.

I only recommend tools I genuinely use or believe are useful for bloggers.

This helps support the site and keep content free.